Dancing to the same tune

Things may not become so hot after all

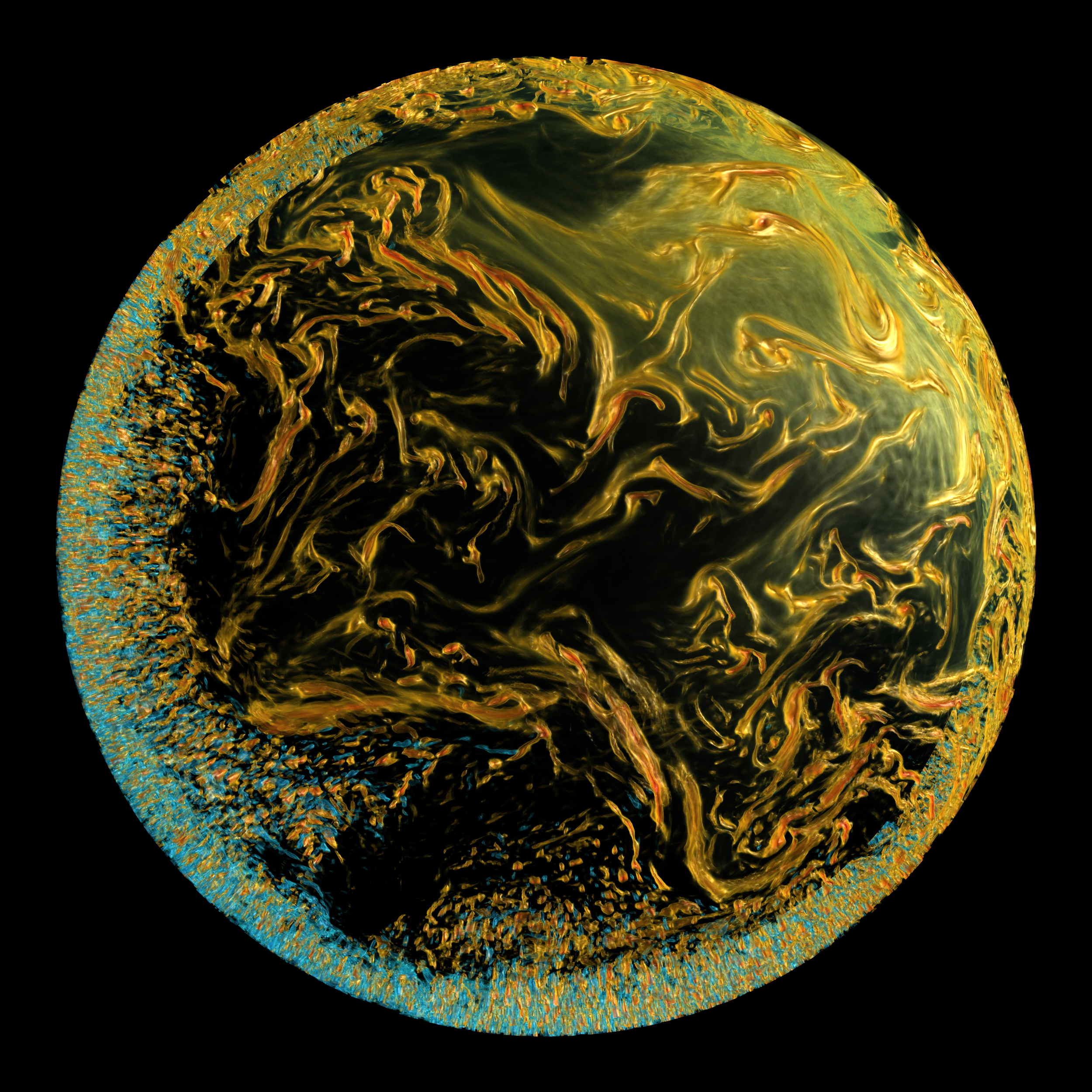

When scientists create simulations of the climate in their supercomputers, two of the most important model characteristics are the climate sensitivity – how much warming you get for a doubling of carbon dioxide – and the cloud-aerosol forcing, the cooling influence you get as a result of pollution affecting cloud formation.

Nowadays, the air is becoming cleaner than it was in the past, and in the future it will be cleaner still. That means that our future is largely governed by the climate sensitivity.

If you were one of those scientists who enjoyed stories of impending doom, you would obviously be hoping that your climate model gave you a nice big juicy estimate of climate sensitivity. But that hope has to be tempered by the realisation that the model's estimate of the cloud-aerosol forcing has to be strong enough to cool things down in your simulation of the 20th century so that the model matches observed reality.

In other words, if you have a big climate sensitivity you need a big cloud-aerosol forcing to make things look right in the 20th century. If you only have a low climate sensitivity, you need a small cloud-aerosol forcing.
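To put rough numbers on that trade-off, here is a back-of-the-envelope sketch. It is not how a CMIP6 model works: it assumes a toy equilibrium relation in which warming equals sensitivity times net forcing divided by the forcing from doubled CO2 (taken as the commonly quoted 3.7 W/m²), and it ignores ocean heat uptake. The function name, the 1.0 K of observed warming and the 3.0 W/m² of greenhouse forcing are illustrative assumptions, not figures from any paper.

```python
# Toy energy-balance arithmetic (an illustration only, not a climate model).
# Assumed relation: warming = sensitivity * (ghg_forcing + aerosol_forcing) / F_2X

F_2X = 3.7  # W/m^2, commonly quoted forcing for a doubling of CO2


def required_aerosol_forcing(sensitivity_k, observed_warming_k, ghg_forcing):
    """Return the aerosol forcing (W/m^2, negative means cooling) that the
    toy relation needs in order to reproduce the observed warming."""
    net_forcing_needed = observed_warming_k * F_2X / sensitivity_k
    return net_forcing_needed - ghg_forcing


if __name__ == "__main__":
    # Hypothetical inputs chosen purely for illustration.
    observed_warming_k = 1.0  # K
    ghg_forcing = 3.0         # W/m^2
    for sensitivity_k in (2.0, 3.0, 5.0):
        aerosol = required_aerosol_forcing(
            sensitivity_k, observed_warming_k, ghg_forcing
        )
        print(f"sensitivity {sensitivity_k:.1f} K -> aerosol forcing {aerosol:.2f} W/m^2")
```

Under these assumptions, the higher the sensitivity, the more negative the aerosol forcing has to be to land on the same 20th century warming, which is the pairing described above.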

Now climate sensitivity and cloud-aerosol forcing are not fed into climate models as assumptions – they emerge from them as outputs. It would therefore take someone more cynical than I to suggest that climate scientists might be tempted to tweak things so that just the right cloud-aerosol forcing emerged from their models in each case.

However, a new paper published in the journal Geophysical Research Letters makes it hard to avoid the conclusion that this is happening. The authors, led by Chenggong Wang of Princeton University, looked at the most recent generation of climate models, known as CMIP6, and found that many of these models were rather good at simulating recent temperature history. Some managed this through strong warming offset by strong cooling (we’ll call these the “strong models”), and others through weak warming and weak cooling (the “mild models”).

The authors’ most important scientific result is that the mild models seem better at reproducing the Earth’s recent temperature history. Most 20th century pollution was emitted in the north, so the balance between greenhouse gas warming and cloud-aerosol cooling has been different between the two hemispheres. Because the strong models give a much more pronounced difference between the hemispheres than the mild ones, this gives us an opportunity to assess which group is more realistic. Unfortunately for climate alarmists, Wang reckons that the observed difference is a much better match with the mild models. This means that predictions of a very hot future are much less plausible.

Amusingly, Wang and his colleagues imply that there is a surprising match between the amount of cooling and heating in the overall model cohort. Lots of models seem to get just the right amount of cooling to offset their heating and so are able to reproduce 20th century temperature history. This strongly suggests that climate scientists are “tuning” (more pejorative terms are frequently used) their models to make them look credible. That they do so is not a new revelation – papers have been written on the subject of tuning of climate models – but it does show us that scientists are still unable to create plausible simulations of the climate system “out of the box”.